Neuromorphic hype

We all know there’s a difference between operating an Indica Diesel car and a WDP 4 diesel locomotive. The former has four cylinders and the latter 16. But that doesn’t mean the WDP 4 simply has four times as many components as the Indica. This is what I think of when I come across articles that trumpet an achievement without paying any attention to its context.

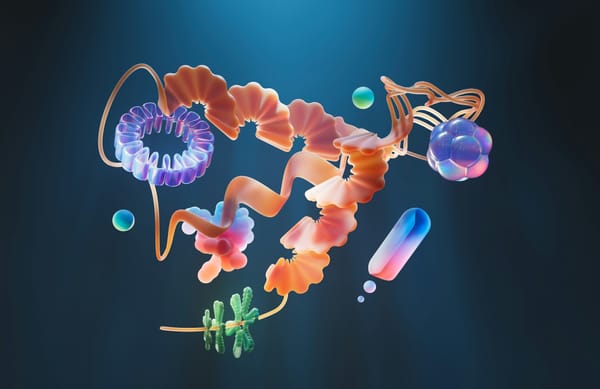

Yesterday, for example, IEEE Spectrum published an article headlined ‘Nanowire Synapses 30,000x Faster Than Nature’s’. An artificial neural network is a network of small data-processing components called neurons. Once the neurons are fed data, they work together to analyse it and solve problems (like spotting the light from one star in a picture of a galaxy). The network also iteratively adjusts the strengths of the connections between neurons, called synapses, so that the neurons together produce better answers. The architecture and the process broadly mimic the way the human brain works, and hardware built to imitate them directly falls under the label ‘neuromorphic computing’.
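To make the idea of ‘adjusting synapses’ a little more concrete, here is a toy sketch in Python: a single software neuron with two weighted inputs (the weights stand in for synapses) that learns the logical OR function by gradient descent. It illustrates only the general idea of learning by nudging connection strengths, not the superconducting circuit in the article. The function names, learning rate and number of passes are my own assumptions.

```python
import math

def sigmoid(z: float) -> float:
    """Squash a neuron's weighted input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, inputs):
    """Output of one neuron: weighted sum of inputs plus bias, then sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def train_neuron(samples, epochs=2000, lr=0.5):
    """Hypothetical helper: iteratively adjust the 'synapse' weights.

    Plain stochastic gradient descent on the cross-entropy loss
    of a single sigmoid neuron.
    """
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # For sigmoid + cross-entropy, d(loss)/d(z) is (output - target).
            error = predict(weights, bias, inputs) - target
            weights = [w - lr * error * x for w, x in zip(weights, inputs)]
            bias -= lr * error
    return weights, bias

# Logical OR: output 1 if either input is 1.
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights, bias = train_neuron(OR_SAMPLES)
for inputs, target in OR_SAMPLES:
    print(inputs, round(predict(weights, bias, inputs)), "expected", target)
```

After training, the rounded outputs should match the OR truth table. Note that ‘learning’ here happens in software over thousands of passes; the article’s 30,000x figure concerns how fast a single physical synapse fires, which a toy like this says nothing about.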

Now consider this excerpt:

“… a new superconducting photonic circuit … mimics the links between brain cells—burning just 0.3 percent of the energy of its human counterparts while operating some 30,000 times as fast. … the synapses are capable of [producing output signals at a rate] exceeding 10 million hertz while consuming roughly 33 attojoules of power per synaptic event (an attojoule is 10⁻¹⁸ of a joule). In contrast, human neurons have a maximum average [output] rate of about 340 hertz and consume roughly 10 femtojoules per synaptic event (a femtojoule is 10⁻¹⁵ of a joule).”
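The two headline ratios follow directly from the figures in this excerpt, as a quick check in Python shows. Both compare a single synaptic event in the laboratory circuit with a single event in the brain; neither says anything about a system operating at scale. The constant names are my own.

```python
# Figures quoted in the Spectrum excerpt (constant names are illustrative).
ARTIFICIAL_RATE_HZ = 10e6      # "exceeding 10 million hertz"
ARTIFICIAL_ENERGY_J = 33e-18   # roughly 33 attojoules per synaptic event
BIOLOGICAL_RATE_HZ = 340       # maximum average rate of human neurons
BIOLOGICAL_ENERGY_J = 10e-15   # roughly 10 femtojoules per synaptic event

# How many times faster the artificial synapse fires.
speed_ratio = ARTIFICIAL_RATE_HZ / BIOLOGICAL_RATE_HZ
# Energy per event of the artificial synapse as a fraction of the biological one.
energy_fraction = ARTIFICIAL_ENERGY_J / BIOLOGICAL_ENERGY_J

print(f"speed ratio: {speed_ratio:,.0f}x")                    # → speed ratio: 29,412x
print(f"energy: {energy_fraction:.2%} of the biological figure")  # → energy: 0.33% of the biological figure
```

That is roughly 29,400 times the rate at about a third of a percent of the energy per event, which is where ‘30,000 times as fast’ and ‘0.3 percent of the energy’ come from.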

The article, however, doesn’t mention that the researchers operated only four circuit blocks in their experiment, whereas the average human brain has 86 billion neurons working at the ‘lower’ efficiency. When such a large assemblage functions together, problems emerge that a smaller one doesn’t face, like removing heat and clearing cellular waste. (The human brain also contains “85 billion non-neuronal cells”, including the glial cells that support neurons.) The energy efficiency of biological neurons must be seen in this context instead of being compared directly with a bespoke laboratory setup.

Philip W. Anderson’s ‘more is different’ thesis offers a more insightful case against such reductive thinking. In a 1972 essay of that name, Anderson, a theoretical physicist, wrote:

“The ability to reduce everything to simple fundamental laws does not imply the ability to start from those laws and reconstruct the universe. In fact, the more the elementary particle physicists tell us about the nature of the fundamental laws the less relevance they seem to have to the very real problems of the rest of science, much less to those of society.”

He contended that the constructionist hypothesis – that you can start from fundamental laws and straightforwardly reconstruct everything built on them – “breaks down” when confronted with “the twin difficulties of scale and complexity”. That is, things that operate at larger scales and with more individual parts are more than the sum of those parts. (I like to think of Anderson’s insight as the spatial analogue of L.P. Hartley’s remark about time: “The past is a foreign country: they do things differently there.”)

So let’s not celebrate something because it’s “30,000x faster than” the same thing in nature – as the Spectrum article’s headline has it – but because it represents good innovation in and of itself. Indeed, the researchers who conducted the new study and are quoted in the article don’t make the comparison themselves; they focus instead on the leap forward their work portends for neuromorphic computing.

Faulty comparisons, on the other hand, could inflate readers’ expectations of future innovation, and when that innovation (almost) inevitably falls behind nature’s achievements, the unmet expectations could seed disillusionment. We’ve already seen this happen with quantum computing. Spectrum’s choice may have been motivated by a desire to pique readers’ interest, which is a fair thing to aspire to, but the headline still falls back on a clichéd comparison with nature instead of doing the harder work of framing the idea right.